Generative AI in Penetration Testing: Exploring Tools and Anonymization Challenges

Generative AI is revolutionizing penetration testing with automation, scripting, and reporting. This research explores AI-driven pentesting tools and anonymization challenges.

Introduction

Generative AI is rapidly transforming penetration testing by offering automation, intelligent guidance, and scripting capabilities. AI-driven tools can streamline reconnaissance, exploit development, and reporting, significantly improving the efficiency of security professionals. However, leveraging AI for security testing comes with challenges, particularly around data privacy and anonymization.

Spark42 Research Group explores existing AI-driven penetration testing tools, with a closer look at GreyDGL/PentestGPT and hackerai-tech/PentestGPT. We also discuss key anonymization considerations for anyone using public Large Language Models (LLMs) in penetration testing.

1. GreyDGL/PentestGPT: AI-Assisted Penetration Testing

Capabilities

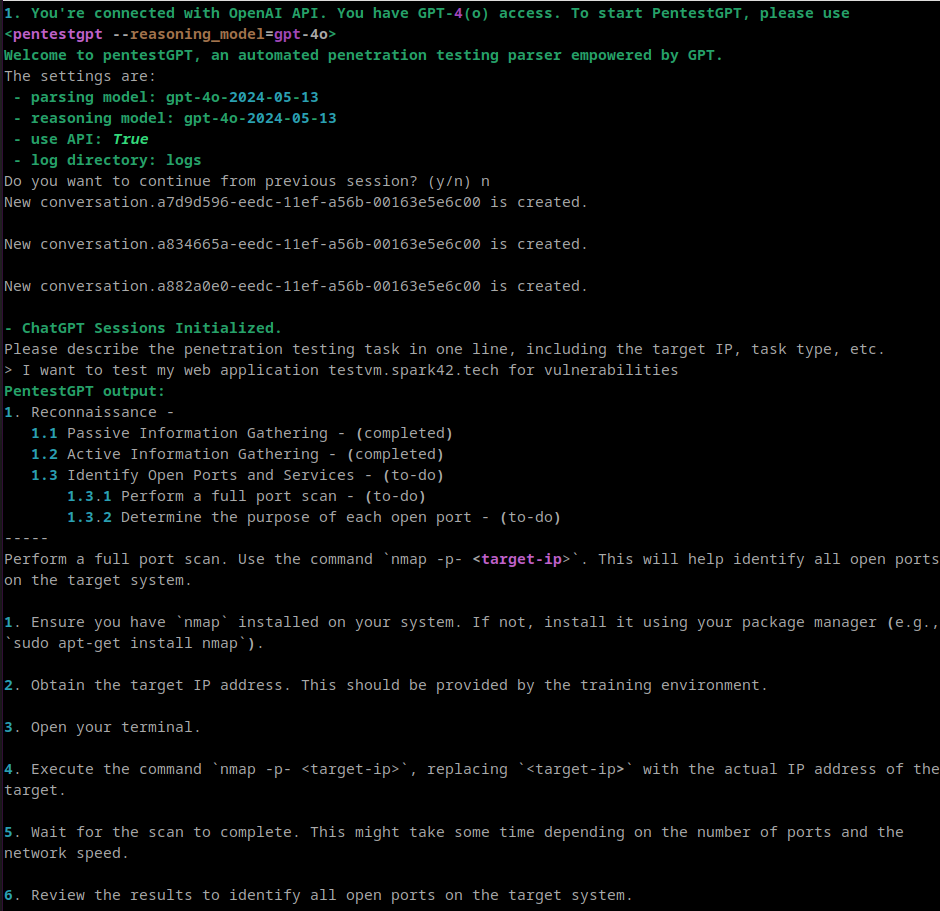

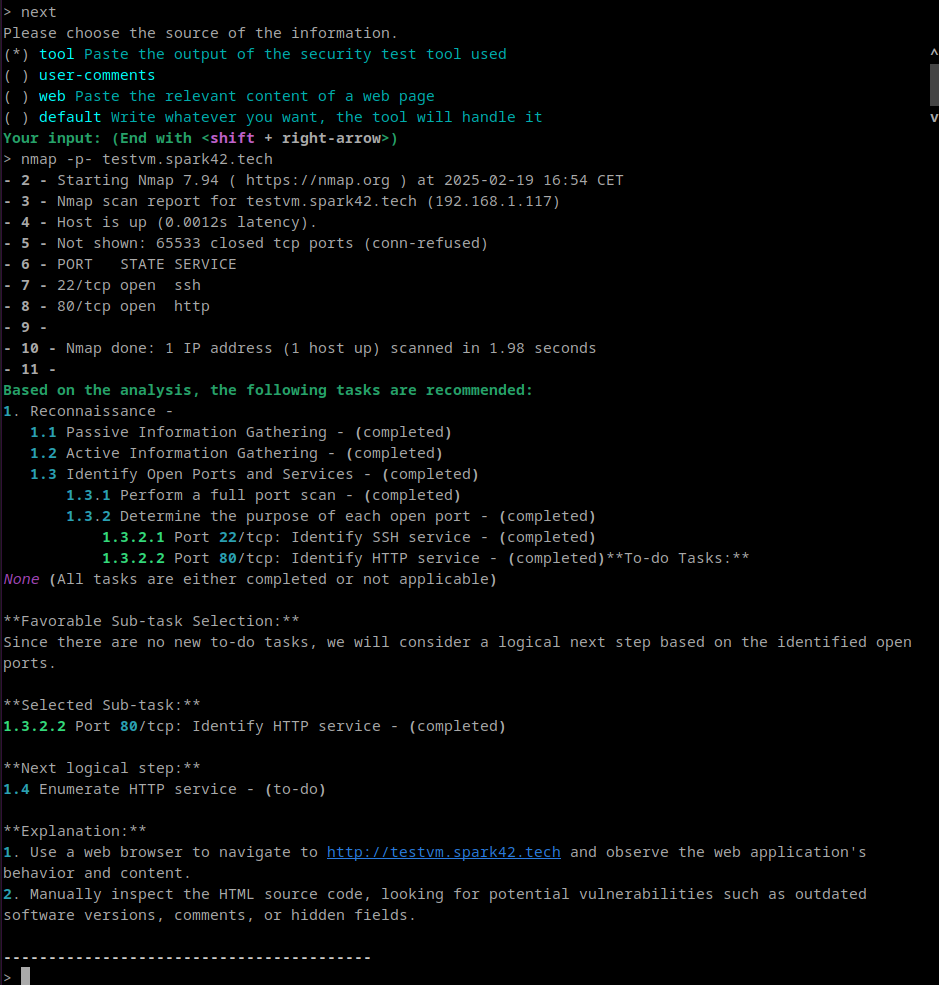

GreyDGL/PentestGPT, with almost a thousand forks, is an AI-powered penetration testing assistant designed to support security professionals in various tasks. As the development team explains:

PentestGPT is a penetration testing tool empowered by Large Language Models (LLMs). It is designed to automate the penetration testing process. It is built on top of ChatGPT API and operate in an interactive mode to guide penetration testers in both overall progress and specific operations.

See the sample of interaction below.

At the time of testing, keeping context was rather difficult. In many cases I had to keep a backup of the whole testing tree, especially when I wanted to leave a session, because returning to it did not work properly. Once I wrapped the tool in Docker and added screen and logging, it became more useful. See the implementation below:

Technical Implementation

In our testing, the Spark42 Research Group set up GreyDGL/PentestGPT inside their labs, utilizing a Dockerized environment with persistent session management, logging, and API key injection for AI model interaction.

Dockerfile

The Dockerfile is designed to:

- Install dependencies and set up the environment.

- Clone and install PentestGPT in editable mode.

- Configure persistent logging for `screen` sessions.

- Start a detached `screen` session for PentestGPT upon container initialization.

```dockerfile
# Use an official Python 3.10 base image
FROM python:3.10-slim

# Install system dependencies for Python builds and Git
RUN apt-get update && apt-get install -y \
    git \
    screen \
    && rm -rf /var/lib/apt/lists/*

# Create app directory
RUN mkdir /app

# Clone the PentestGPT repository
RUN git config --global http.version HTTP/1.1 && git clone --progress --verbose https://github.com/GreyDGL/PentestGPT.git /app

# Set the working directory to the cloned repository
WORKDIR /app

# Install PentestGPT in editable mode
RUN pip3 install --no-cache-dir -e .

# Create a startup script for screen
RUN echo '#!/bin/bash' > /start.sh && \
    echo 'LOG_DIR=/app/test_history/logs/screen' >> /start.sh && \
    echo 'mkdir -p $LOG_DIR' >> /start.sh && \
    echo 'LOGFILE=$LOG_DIR/screen_$(date +%Y-%m-%d_%H-%M-%S).log' >> /start.sh && \
    echo 'screen -Logfile $LOGFILE -L -dmS pentestgpt bash -c "pentestgpt; exec bash"' >> /start.sh && \
    chmod +x /start.sh

# Set the entry point to start screen with PentestGPT
CMD ["/bin/bash", "-c", "/start.sh && exec /bin/bash"]
```

Build and Run the Container

Build the Docker Image:

```bash
docker build --no-cache -t pentestgpt .
```

Run the Container with Persistent Logs: To run the container with persistent data storage:

```bash
docker run -it \
  --name pentestgpt \
  -v /path/on/host/test_history:/app/test_history \
  -e OPENAI_API_KEY='your-api-key' \
  pentestgpt
```

Managing session

- Detach from the Container: `Ctrl+P`, then `Ctrl+Q`

- Reattach to the Container: `docker attach pentestgpt`

- List Active screen Sessions: `screen -ls`

- Attach to the pentestgpt Session: `screen -r pentestgpt`

- Detach from the Session: `Ctrl+A`, then `D`

Logs

All screen session output is logged with a timestamped filename.

- Inside the Container: `/app/test_history/logs/screen/`

- On the Host: `/home/user/pentestgpt/test_history/logs/screen/`
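Because each session writes a new timestamped file, finding the most recent log by hand gets tedious after a few runs. The following is a minimal sketch that picks the newest log by parsing the timestamp from the filename. It assumes the `screen_YYYY-MM-DD_HH-MM-SS.log` naming produced by the startup script above; the helper names `parse_log_time` and `latest_screen_log` are hypothetical and not part of PentestGPT.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional

# Filename pattern written by the startup script: screen_YYYY-MM-DD_HH-MM-SS.log
LOG_NAME_FORMAT = "screen_%Y-%m-%d_%H-%M-%S.log"


def parse_log_time(filename: str) -> Optional[datetime]:
    """Return the timestamp encoded in a log filename, or None if it does not match."""
    try:
        return datetime.strptime(filename, LOG_NAME_FORMAT)
    except ValueError:
        return None


def latest_screen_log(log_dir: Path) -> Optional[Path]:
    """Return the newest timestamped screen log in log_dir, or None if there is none."""
    stamped = []
    for path in log_dir.glob("screen_*.log"):
        timestamp = parse_log_time(path.name)
        if timestamp is not None:  # skip files that only look similar
            stamped.append((timestamp, path))
    if not stamped:
        return None
    return max(stamped, key=lambda item: item[0])[1]
```

On the host, calling `latest_screen_log(Path("/home/user/pentestgpt/test_history/logs/screen"))` would return the path of the most recent session log, or `None` if the directory holds no matching files.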

2. PentestGPT.ai: A Subscription-Based AI Penetration Testing Tool

Observations

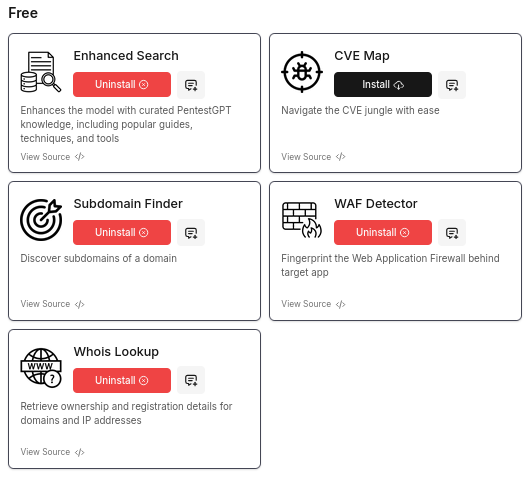

PentestGPT.ai is a commercial AI-powered penetration testing service. Its codebase is published at hackerai-tech/PentestGPT, which had more than 60 forks at the time of writing. In our analysis, we observed the following:

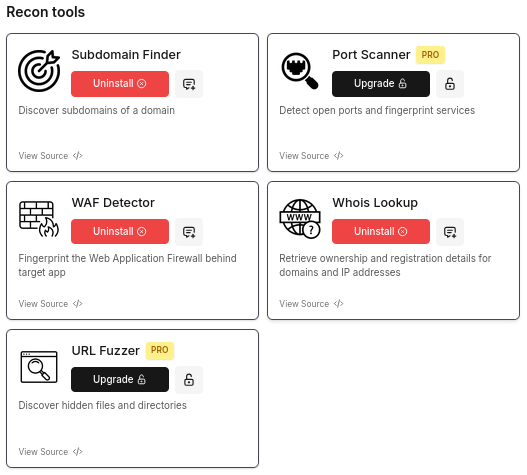

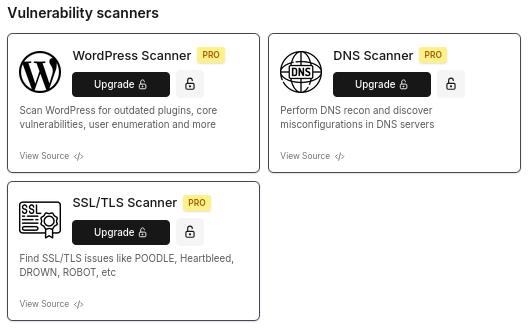

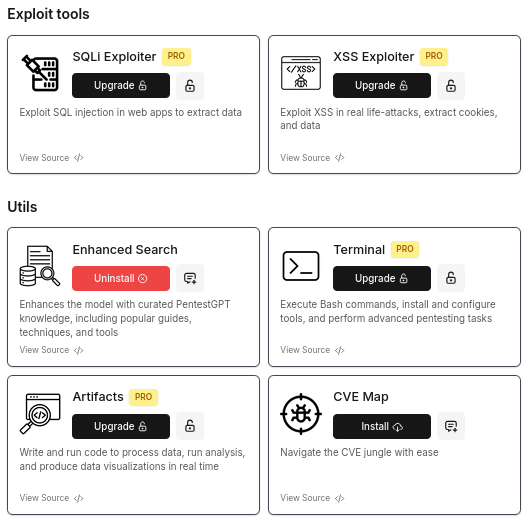

- Integration with Reconnaissance Tools: The service is notable for its integration with multiple reconnaissance and scanning tools from ProjectDiscovery, offering enhanced automation capabilities. A few are available for free, but most require a paid subscription, which was priced at $20 per month at the time of testing. See them below.

- Context Retention Issue: During our testing, PentestGPT.ai did not consistently retain full context across multiple queries, so users had to remind it of previous inputs. When asked how much session data it could retain and carry into the final pentest report, PentestGPT.ai replied:

"PentestGPT cannot automatically fill out a report based on the data discussed in the chat session. However, I can guide you through the process of creating a report and help you structure and format the information you've gathered during our conversation."

While promising, PentestGPT.ai would also benefit from improved session management for more seamless engagement.

3. Anonymization Considerations for AI-Driven Pentesting

Using public generative AI models for penetration testing presents a risk of exposing sensitive business or client data. Proper anonymization is crucial to prevent unintentional data leaks.

Key Anonymization Strategies:

- Business Identification Data Protection: Before querying an AI model, organizations should strip any proprietary, confidential, or personally identifiable information (PII).

- IP Address Pseudonymization: Testing environments should obscure or pseudonymize IP addresses before sharing data with external AI services to prevent attribution.
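To make the pseudonymization idea concrete, here is a minimal sketch that swaps every IPv4 address in a piece of text for a stable placeholder and can map the placeholders back when the model's answer returns. The class name `IPPseudonymizer` is hypothetical, and drawing placeholders from `198.18.0.0/15` (a range reserved for benchmarking) is an assumption made for this example, not a recommendation from any of the tools discussed here.

```python
import ipaddress
import re

# Loose IPv4 pattern; every candidate is validated with the ipaddress module.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


class IPPseudonymizer:
    """Replace each distinct IPv4 address with a stable placeholder address."""

    def __init__(self, placeholder_net: str = "198.18.0.0/15") -> None:
        # Assumption: placeholders come from a reserved range so they are
        # unlikely to collide with addresses that are actually in scope.
        self._pool = iter(ipaddress.ip_network(placeholder_net).hosts())
        self._forward = {}  # real address -> placeholder
        self._reverse = {}  # placeholder -> real address

    def _placeholder_for(self, real_ip: str) -> str:
        if real_ip not in self._forward:
            fake_ip = str(next(self._pool))
            self._forward[real_ip] = fake_ip
            self._reverse[fake_ip] = real_ip
        return self._forward[real_ip]

    def anonymize(self, text: str) -> str:
        """Return text with every valid IPv4 address replaced by its placeholder."""
        def swap(match):
            candidate = match.group(0)
            try:
                ipaddress.IPv4Address(candidate)
            except ValueError:
                return candidate  # e.g. "999.1.1.1" is left untouched
            return self._placeholder_for(candidate)

        return IPV4_PATTERN.sub(swap, text)

    def restore(self, text: str) -> str:
        """Map placeholders in text (e.g. a model response) back to real addresses."""
        return IPV4_PATTERN.sub(
            lambda m: self._reverse.get(m.group(0), m.group(0)), text
        )
```

The same real address always maps to the same placeholder, so relationships between hosts stay readable to the model. The mapping lives only in memory on the tester's machine. Note that dotted version strings that happen to look like IPv4 addresses would be swapped as well.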

Open-Source Anonymization Tools

Several open-source projects can help address these challenges:

- Anonip: A tool designed for anonymizing IP addresses in server logs.

- IP-anonymizer: Provides easy-to-use methods for obfuscating IP information.

- anonymize-ip: A JavaScript-based solution for handling IP anonymization.

- IPFuscator: A Python-based tool that obfuscates IP addresses using various encoding techniques.
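Log-oriented anonymizers typically work by truncation: the lowest bits of each address are zeroed so it no longer points at a single host. The sketch below illustrates that general technique with Python's standard `ipaddress` module. It is not code from any of the projects listed above; the function name `mask_ip` and the default mask widths are assumptions chosen for illustration.

```python
import ipaddress


def mask_ip(address: str, ipv4_bits: int = 12, ipv6_bits: int = 84) -> str:
    """Zero the lowest bits of an IP address so it no longer identifies one host."""
    ip = ipaddress.ip_address(address)
    drop = ipv4_bits if ip.version == 4 else ipv6_bits
    masked = (int(ip) >> drop) << drop  # clear the lowest `drop` bits
    # Rebuild with the same address class so IPv6 values stay IPv6.
    return str(type(ip)(masked))


print(mask_ip("192.168.100.200"))               # 192.168.96.0
print(mask_ip("2001:db8:85a3::8a2e:370:7334"))  # 2001:db8:85a0::
```

Unlike a reversible mapping, truncation cannot be undone. That suits shared logs, but it is less convenient when the model's answer has to be mapped back to concrete targets.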

Security professionals using AI-assisted pentesting must integrate anonymization mechanisms into their workflows to maintain compliance with data protection regulations.
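One way to build this into a workflow is a small scrubbing step that runs before any prompt leaves the tester's machine. The sketch below replaces e-mail addresses and a caller-supplied list of client-specific terms with numbered placeholders. The function name `scrub_prompt`, the placeholder format, and the simplified e-mail pattern are all assumptions for illustration, and a real engagement would need a broader set of patterns.

```python
import re

# Simplified e-mail pattern; an assumption that covers common cases only.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scrub_prompt(text: str, client_terms: list[str]) -> tuple[str, dict[str, str]]:
    """Replace e-mails and client-specific terms with numbered placeholders.

    Returns the scrubbed text and a placeholder -> original mapping that
    stays on the tester's machine.
    """
    mapping = {}  # placeholder -> original value
    seen = {}     # (kind, lowercased original) -> placeholder
    counters = {"EMAIL": 0, "ORG": 0}

    def placeholder(kind: str, original: str) -> str:
        key = (kind, original.lower())
        if key not in seen:
            counters[kind] += 1
            seen[key] = f"<{kind}_{counters[kind]}>"
            mapping[seen[key]] = original
        return seen[key]

    text = EMAIL_PATTERN.sub(lambda m: placeholder("EMAIL", m.group(0)), text)
    # Longest terms first, so a full domain is replaced before a short brand name.
    for term in sorted(client_terms, key=len, reverse=True):
        text = re.sub(
            re.escape(term),
            lambda m: placeholder("ORG", m.group(0)),
            text,
            flags=re.IGNORECASE,
        )
    return text, mapping
```

For example, `scrub_prompt("Contact admin@acme-corp.example about the Acme portal", ["Acme", "acme-corp.example"])` yields text in which the address becomes `<EMAIL_1>` and the brand name becomes an `<ORG_n>` placeholder. The names `acme-corp.example` and `Acme` are made up for this illustration.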

Conclusion

Generative AI has opened new frontiers in penetration testing, providing automation, expert guidance, and streamlined reporting. While tools like GreyDGL/PentestGPT and PentestGPT.ai demonstrate the potential of AI in security testing, challenges such as context retention still need to be addressed.

The Spark42 Research Group already leverages generative AI in most of its activities, applying AI-driven methodologies to enhance research, security testing, and automation. Its experience with AI in penetration testing shows the real-world benefits of integrating these technologies into security workflows.

Moreover, organizations leveraging AI-driven penetration testing must prioritize data anonymization to prevent exposure of sensitive information. Open-source anonymization tools offer practical solutions for preserving privacy while benefiting from AI-powered security insights.

As AI models evolve, we expect further advancements in AI-assisted penetration testing, making security assessments more efficient, accessible, and robust. However, ethical considerations and responsible data handling remain critical as the industry navigates this new landscape.